The cloud also focuses on maximizing the effectiveness of shared resources. Cloud resources are usually not only shared by multiple users but also dynamically reallocated according to demand. This can work for allocating resources to users in different time zones. For example, a cloud computing facility may serve European users with a specific application (e.g. email) during European business hours, while the same resources are reallocated to serve North American users with another application (e.g. a web server) during North American business hours. This approach should maximize the use of computing power and thus also reduce environmental damage, since less power, air conditioning, rack space, and so on, is required for the same functions.
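The time-zone reallocation described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular provider schedules capacity: the `Region` type, the `allocate` function and the business windows (roughly 07:00-16:00 UTC for Europe and 14:00-22:00 UTC for North America, ignoring daylight saving time) are all hypothetical. Where the windows overlap, the pool is split evenly.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Region:
    name: str
    application: str
    start_utc: int  # first business hour in UTC (inclusive)
    end_utc: int    # end of business hours in UTC (exclusive)

    def is_active(self, hour_utc: int) -> bool:
        return self.start_utc <= hour_utc < self.end_utc

# Hypothetical business windows, ignoring daylight saving time.
REGIONS = [
    Region("Europe", "email", 7, 16),
    Region("North America", "web server", 14, 22),
]

def allocate(pool_size: int, hour_utc: int) -> dict[str, int]:
    """Split a shared pool of servers evenly among regions in business hours."""
    active = [r for r in REGIONS if r.is_active(hour_utc)]
    if not active:
        return {}  # nobody is working: the whole pool is idle
    share, remainder = divmod(pool_size, len(active))
    allocation = {}
    for i, region in enumerate(active):
        # Any leftover servers go one at a time to the first active regions.
        allocation[f"{region.name}/{region.application}"] = share + (1 if i < remainder else 0)
    return allocation

print(allocate(100, 10))  # European morning
print(allocate(100, 15))  # overlap: both regions active
print(allocate(100, 20))  # North American afternoon
```

Outside both windows the function returns an empty allocation; idle servers in that state could be powered down, which is where the savings in power and cooling mentioned above would come from.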

The term "moving to the cloud" also refers to an organization moving away from a traditional capex model (buy the dedicated hardware and depreciate it over a period of time) to the opex model (use a shared cloud infrastructure and pay as you use it).
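To make the capex/opex contrast concrete, the following Python sketch compares straight-line depreciation of a purchased server with pay-as-you-go billing. The purchase price, depreciation period and hourly rate are invented for illustration, and the model deliberately ignores power, cooling, staff and financing costs.

```python
def capex_annual_cost(purchase_price: float, years: int) -> float:
    """Capex: straight-line depreciation spreads the purchase price evenly over its life."""
    return purchase_price / years

def opex_annual_cost(hourly_rate: float, hours_used: float) -> float:
    """Opex: pay-as-you-go billing charges only for the hours actually used."""
    return hourly_rate * hours_used

def break_even_hours(purchase_price: float, years: int, hourly_rate: float) -> float:
    """Yearly usage at which both models cost the same (ignoring all other costs)."""
    return capex_annual_cost(purchase_price, years) / hourly_rate

# Hypothetical figures: a $12,000 server over 3 years vs. $0.50 per cloud hour.
print(capex_annual_cost(12_000, 3))
print(opex_annual_cost(0.50, 2_000))
print(break_even_hours(12_000, 3, 0.50))
```

With these hypothetical figures the break-even point is 8,000 hours per year (a year has 8,760 hours), so in this simplified comparison a workload that runs for fewer hours than that is cheaper under the opex model.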

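The National Institute of Standards and Technology's definition of cloud computing identifies "five essential characteristics":

- On-demand self-service

- Broad network access

- Resource pooling

- Rapid elasticity

- Measured service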

Platform as a service (PaaS)

In the PaaS model, cloud providers deliver a computing platform, typically including an operating system, programming language execution environment, database, and web server. Application developers can develop and run their software solutions on a cloud platform without the cost and complexity of buying and managing the underlying hardware and software layers. With some PaaS offerings, the underlying compute and storage resources scale automatically to match application demand, so that the cloud user does not have to allocate resources manually.
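Automatic scaling of this kind is commonly driven by a target-tracking rule. The sketch below assumes CPU utilization as the scaling metric and uses the proportional formula desired = ceil(current * utilization / target), the same form used by the Kubernetes Horizontal Pod Autoscaler. The function name, the 50% default target and the instance limits are illustrative assumptions, not any PaaS provider's actual policy.

```python
import math

def desired_instances(current: int, cpu_utilization: float, target: float = 0.5,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Scale the instance count so average utilization moves back toward the target."""
    if current < 1 or target <= 0:
        raise ValueError("need at least one instance and a positive target")
    desired = math.ceil(current * cpu_utilization / target)
    # Clamp to the limits the application owner has configured.
    return max(min_instances, min(max_instances, desired))

print(desired_instances(4, 0.75))  # load above target: scale out
print(desired_instances(4, 0.25))  # load below target: scale in
```

Rounding up errs on the side of extra capacity; the upper limit keeps a sudden spike from producing an unbounded bill.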

Examples of PaaS include AWS Elastic Beanstalk, Cloud Foundry, Heroku, Force.com, EngineYard, Mendix, OpenShift, Google App Engine, AppScale, Windows Azure Cloud Services, OrangeScape and Jelastic.

Software as a service (SaaS)

In the business model using software as a service (SaaS), users are provided access to application software and databases. Cloud providers manage the infrastructure and platforms that run the applications. SaaS is sometimes referred to as "on-demand software" and is usually priced on a pay-per-use basis or through a subscription fee.

In the SaaS model, cloud providers install and operate application software in the cloud, and cloud users access the software from cloud clients. Cloud users do not manage the cloud infrastructure and platform where the application runs. This eliminates the need to install and run the application on the cloud user's own computers, which simplifies maintenance and support. Cloud applications differ from other applications in their scalability, which can be achieved by cloning tasks onto multiple virtual machines at run time to meet changing work demand.[57] Load balancers distribute the work over the set of virtual machines. This process is transparent to the cloud user, who sees only a single access point. To accommodate a large number of cloud users, cloud applications can be multitenant, meaning that any machine may serve more than one cloud-user organization.

It is common to refer to special types of cloud-based application software with a similar naming convention: desktop as a service, business process as a service, test environment as a service, and communication as a service.
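The cloning and load-balancing arrangement described above can be illustrated with a round-robin balancer. This is a minimal sketch under simple assumptions: `RoundRobinBalancer`, the VM names and the tenant names are hypothetical, requests are plain strings, and real load balancers also take health checks and current load into account.

```python
import itertools

class RoundRobinBalancer:
    """Single access point that spreads requests across cloned application VMs."""

    def __init__(self, vm_names: list[str]):
        self.vms = list(vm_names)
        self._rotation = itertools.cycle(list(self.vms))

    def add_vm(self, name: str) -> None:
        # A new clone started at run time; restart the rotation so it is included.
        self.vms.append(name)
        self._rotation = itertools.cycle(list(self.vms))

    def route(self, tenant: str, request: str) -> tuple[str, str, str]:
        # Multitenancy: requests from different tenants share the same pool of VMs.
        return next(self._rotation), tenant, request

balancer = RoundRobinBalancer(["vm-1", "vm-2"])
for tenant in ["acme", "globex", "acme"]:
    print(balancer.route(tenant, "GET /inbox"))
```

The caller only ever talks to the balancer, which is what makes the set of VMs behind it invisible to the cloud user.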

The pricing model for SaaS applications is typically a monthly or yearly flat fee per user,[58] so the price is scalable and adjustable if users are added or removed at any point.[59]
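Because the fee is charged per user, the bill changes as users are added or removed. The sketch below assumes a hypothetical flat fee of $10 per user per month and daily proration over a 30-day month; actual SaaS vendors use a variety of proration and billing-cycle rules. `Decimal` is used so that currency amounts round predictably.

```python
from decimal import Decimal, ROUND_HALF_UP

def monthly_bill(fee_per_user: Decimal, active_days: list[int], days_in_month: int = 30) -> Decimal:
    """Prorate a flat per-user monthly fee by the days each user was active."""
    total = sum((fee_per_user * days / days_in_month for days in active_days), Decimal("0"))
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

# Hypothetical: $10 per user; two users all month, one added halfway through.
print(monthly_bill(Decimal("10"), [30, 30, 15]))
```

In this example the two full-month users cost $10 each and the user added halfway through costs $5, for a total of $25.00.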

Proponents claim that SaaS gives a business the potential to reduce IT operational costs by outsourcing hardware and software maintenance and support to the cloud provider. This enables the business to reallocate IT operations costs away from hardware/software spending and personnel expenses, towards meeting other goals. In addition, with applications hosted centrally, updates can be released without the need for users to install new software. One drawback of SaaS is that the users' data are stored on the cloud provider's server, which creates a risk of unauthorized access to the data.

Network as a service (NaaS)

Network as a service is a category of cloud services in which the capability provided to the cloud service user is the use of network/transport connectivity services and/or inter-cloud network connectivity services.[60] NaaS involves the optimization of resource allocations by considering network and computing resources as a unified whole.[61]

Traditional NaaS services include flexible and extended VPN, and bandwidth on demand.[60] Realizations of the NaaS concept also include the provision of a virtual network service by the owners of the network infrastructure to a third party (VNP – VNO).[62][63]

Cloud clients

Users access cloud computing using networked client devices, such as desktop computers, laptops, tablets and smartphones. Some of these devices, known as cloud clients, rely on cloud computing for all or a majority of their applications and are essentially useless without it. Examples are thin clients and the browser-based Chromebook. Many cloud applications do not require specific software on the client and instead use a web browser to interact with the cloud application. With Ajax and HTML5, these web user interfaces can achieve a look and feel similar to, or even better than, that of native applications. Some cloud applications, however, support specific client software dedicated to these applications (e.g., virtual desktop clients and most email clients). Some legacy applications (line-of-business applications that until now have been prevalent in thin client computing) are delivered via a screen-sharing technology.

Deployment models

Cloud computing types

Private cloud

Private cloud is cloud infrastructure operated solely for a single organization, whether managed internally or by a third party and hosted internally or externally.[2] Undertaking a private cloud project requires a significant degree of engagement to virtualize the business environment, and requires the organization to reevaluate decisions about existing resources. When done right, it can improve business, but every step in the project raises security issues that must be addressed to prevent serious vulnerabilities.[64]

Private clouds have attracted criticism because users "still have to buy, build, and manage them" and thus do not benefit from less hands-on management,[65] essentially "[lacking] the economic model that makes cloud computing such an intriguing concept".[66][67]

Comparison for SaaS

| | Public cloud | Private cloud |
|---|---|---|
| Initial cost | Typically zero | Typically high |
| Running cost | Predictable | Unpredictable |
| Customization | Impossible | Possible |
| Privacy | No (host has access to the data) | Yes |
| Single sign-on | Impossible | Possible |
| Scaling up | Easy while within defined limits | Laborious but no limits |

Public cloud

A cloud is called a "public cloud" when the services are rendered over a network that is open for public use. Technically there is no difference between public and private cloud architecture; however, security considerations may be substantially different for services (applications, storage, and other resources) that are made available by a service provider for a public audience and when communication is effected over a non-trusted network. Generally, public cloud service providers like Amazon AWS, Microsoft and Google own and operate the infrastructure and offer access only via the Internet (direct connectivity is not offered).[31]

Community cloud

Community cloud shares infrastructure between several organizations from a specific community with common concerns (security, compliance, jurisdiction, etc.), whether managed internally or by a third party and hosted internally or externally. The costs are spread over fewer users than a public cloud (but more than a private cloud), so only some of the cost savings potential of cloud computing is realized.[2]

Hybrid cloud

Hybrid cloud is a composition of two or more clouds (private, community or public) that remain unique entities but are bound together, offering the benefits of multiple deployment models.[2] Such composition expands deployment options for cloud services, allowing IT organizations to use public cloud computing resources to meet temporary needs.[68] This capability enables hybrid clouds to employ cloud bursting for scaling across clouds.[2]

Cloud bursting is an application deployment model in which an application runs in a private cloud or data center and "bursts" to a public cloud when the demand for computing capacity increases. A primary advantage of cloud bursting and a hybrid cloud model is that an organization pays for extra compute resources only when they are needed.[69]

Cloud bursting enables data centers to create an in-house IT infrastructure that supports average workloads and to use cloud resources from public or private clouds during spikes in processing demand.[70]
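The bursting decision itself is simple arithmetic: serve demand from in-house capacity first and send only the excess to the public cloud. The sketch below is a simplified model; the `place_workload` function, the capacity units and the per-unit public rate are hypothetical, and a real deployment would also have to handle data transfer and latency between the two environments.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Placement:
    private_units: int
    public_units: int
    public_cost: float

def place_workload(demand: int, private_capacity: int, public_rate: float) -> Placement:
    """Fill in-house capacity first and burst only the excess to a public cloud."""
    private = min(demand, private_capacity)
    burst = max(0, demand - private_capacity)
    return Placement(private, burst, burst * public_rate)

for demand in (80, 130):  # a normal hour and a spike, in hypothetical capacity units
    print(demand, place_workload(demand, private_capacity=100, public_rate=0.25))
```

With 100 units of private capacity, a demand of 80 units incurs no public cost, while a spike to 130 units bursts 30 units at a cost of 7.5, reflecting the pay-only-when-needed advantage described above.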

By utilizing "hybrid cloud" architecture, companies and individuals are able to obtain degrees of fault tolerance combined with locally immediate usability without dependency on internet connectivity. Hybrid cloud architecture requires both on-premises resources and off-site (remote) server-based cloud infrastructure.

Hybrid clouds have been said to lack the flexibility, security and certainty of in-house applications.[71] On the other hand, a hybrid cloud can combine the flexibility of in-house applications with the fault tolerance and scalability of cloud-based services.

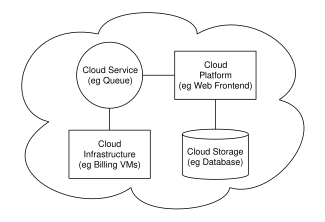

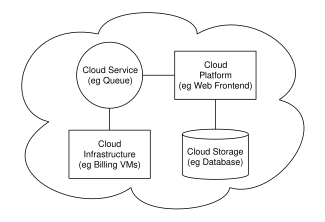

Architecture

Cloud computing sample architecture

Cloud architecture,[72] the systems architecture of the software systems involved in the delivery of cloud computing, typically involves multiple cloud components communicating with each other over a loose coupling mechanism such as a messaging queue. Elastic provision implies intelligence in the use of tight or loose coupling as applied to mechanisms such as these and others.
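Loose coupling through a message queue can be demonstrated in-process with Python's standard `queue` and `threading` modules. This is only an analogy under stated assumptions: in a real cloud architecture the queue would typically be a separate managed messaging service and the components would run on different machines. The producer and the workers never reference each other; they share only the queue, so either side can be scaled or replaced independently. The function names are hypothetical.

```python
import queue
import threading

def worker(inbox: queue.Queue, results: list) -> None:
    """A component that knows only the queue, not who produced the work."""
    while True:
        job = inbox.get()
        if job is None:              # sentinel: shut down cleanly
            inbox.task_done()
            break
        results.append(job.upper())  # stand-in for real processing
        inbox.task_done()

def run_pipeline(jobs: list[str], n_workers: int = 2) -> list[str]:
    inbox: queue.Queue = queue.Queue()
    results: list[str] = []
    threads = [threading.Thread(target=worker, args=(inbox, results)) for _ in range(n_workers)]
    for t in threads:
        t.start()
    for job in jobs:
        inbox.put(job)               # the producer never calls a worker directly
    for _ in threads:
        inbox.put(None)              # one sentinel per worker, queued after all jobs
    for t in threads:
        t.join()
    return sorted(results)           # completion order across workers is not fixed

print(run_pipeline(["resize image", "send email", "index page"]))
```

Changing `n_workers` alters how many consumers drain the queue without any change to the producer, which is the elasticity that loose coupling is meant to allow.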

The Intercloud

The Intercloud[73] is an interconnected global "cloud of clouds"[74][75] and an extension of the Internet "network of networks" on which it is based.[76][77][78]

Cloud engineering

Cloud engineering is the application of engineering disciplines to cloud computing. It brings a systematic approach to the high-level concerns of commercialisation, standardisation, and governance in conceiving, developing, operating and maintaining cloud computing systems. It is a multidisciplinary method encompassing contributions from diverse areas such as systems, software, web, performance, information, security, platform, risk, and quality engineering.

Issues

Threats and opportunities of the cloud

Cloud computing continues to gain steam,[80] with 56% of major European technology decision-makers estimating that the cloud is a priority in 2013 and 2014, and with the cloud budget possibly reaching 30% of the overall IT budget.[citation needed][81]

According to the TechInsights Report 2013: Cloud Succeeds, which is based on a survey, cloud implementations generally meet or exceed expectations across major service models, such as Infrastructure as a Service (IaaS), Platform as a Service (PaaS) and Software as a Service (SaaS).[82]

Several deterrents to the widespread adoption of cloud computing remain. Among them are reliability, availability of services and data, security, complexity, costs, regulations and legal issues, performance, migration, reversion, the lack of standards, limited customization and issues of privacy. The cloud also offers many strong points: infrastructure flexibility, faster deployment of applications and data, cost control, adaptation of cloud resources to real needs, improved productivity, etc. The early 2010s cloud market is dominated by software and services in SaaS mode and IaaS (infrastructure), especially the private cloud. PaaS and the public cloud lag further behind.

Privacy

Privacy advocates have criticized the cloud model for giving hosting companies greater ease to control, and thus to monitor at will, communication between host company and end user, and to access user data (with or without permission). Instances such as the secret NSA program, working with AT&T and Verizon, which recorded over 10 million telephone calls between American citizens, cause uncertainty among privacy advocates about the greater powers it gives to telecommunication companies to monitor user activity.[83][84] A cloud service provider (CSP) can complicate data privacy because of the extent of virtualization (virtual machines) and cloud storage used to implement cloud service.[85] In CSP operations, customer or tenant data may not remain on the same system, in the same data center or even within the same provider's cloud; this can lead to legal concerns over jurisdiction. While there have been efforts (such as US-EU Safe Harbor) to "harmonise" the legal environment, providers such as Amazon still cater to major markets (typically the United States and the European Union) by deploying local infrastructure and allowing customers to select "availability zones."[86] Cloud computing poses privacy concerns because the service provider may access the data that is on the cloud at any point in time. The provider could accidentally or deliberately alter or even delete information.[87]

Postage and delivery services company Pitney Bowes launched Volly, a cloud-based digital mailbox service, to leverage its communication management assets. The company faced the technical challenge of providing strong data security and privacy, and addressed it by applying customized, application-level security, including encryption.[88]

Compliance

To comply with regulations including FISMA, HIPAA, and SOX in the United States, the Data Protection Directive in the EU and the credit card industry's PCI DSS, users may have to adopt community or hybrid deployment modes that are typically more expensive and may offer restricted benefits. This is how Google is able to "manage and meet additional government policy requirements beyond FISMA"[89][90] and Rackspace Cloud or QubeSpace are able to claim PCI compliance.[91]

Many providers also obtain a SAS 70 Type II audit, but this has been criticised on the grounds that the hand-picked set of goals and standards determined by the auditor and the auditee is often not disclosed and can vary widely.[92] Providers typically make this information available on request, under a non-disclosure agreement.[93][94]

Customers in the EU contracting with cloud providers outside the EU/EEA have to adhere to the EU regulations on export of personal data.[95]

U.S. federal agencies have been directed by the Office of Management and Budget to use a process called FedRAMP (Federal Risk and Authorization Management Program) to assess and authorize cloud products and services. Federal CIO Steven VanRoekel issued a memorandum to federal agency Chief Information Officers on December 8, 2011, defining how federal agencies should use FedRAMP. FedRAMP consists of a subset of NIST Special Publication 800-53 security controls specifically selected to provide protection in cloud environments. A subset has been defined for the FIPS 199 low categorization and for the FIPS 199 moderate categorization. The FedRAMP program has also established a Joint Authorization Board (JAB) consisting of Chief Information Officers from DoD, DHS and GSA. The JAB is responsible for establishing accreditation standards for the third-party organizations that perform the assessments of cloud solutions. The JAB also reviews authorization packages and may grant provisional authorization to operate. The federal agency consuming the service retains final responsibility for granting the authority to operate.[96]
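FIPS 199 assigns a potential impact level (low, moderate or high) to each of three security objectives: confidentiality, integrity and availability. A common convention, the "high-water mark" described in FIPS 200, takes the highest of the three as the overall system level, which then selects the control baseline. The sketch below models only the low and moderate baselines named above; the function names and the returned baseline labels are illustrative, not an official FedRAMP tool.

```python
from enum import IntEnum

class Impact(IntEnum):
    """FIPS 199 potential impact levels, ordered so they can be compared."""
    LOW = 1
    MODERATE = 2
    HIGH = 3

def system_impact(confidentiality: Impact, integrity: Impact, availability: Impact) -> Impact:
    """High-water mark: the overall level is the highest of the three objectives."""
    return max(confidentiality, integrity, availability)

def baseline_for(level: Impact) -> str:
    # Only the low and moderate baselines described in the text are modeled.
    if level is Impact.HIGH:
        raise ValueError("this sketch models only the low and moderate baselines")
    return f"{level.name.lower()} baseline"

level = system_impact(Impact.LOW, Impact.MODERATE, Impact.LOW)
print(level.name, "->", baseline_for(level))
```

A single moderate objective is enough to move the whole system to the moderate baseline, which is why categorization is done per objective before taking the maximum.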

A multitude of laws and regulations have imposed specific compliance requirements on many companies that collect, generate or store data. These policies may dictate a wide array of data storage practices, such as how long information must be retained, the process used for deleting data, and even certain recovery plans. Below are some examples of compliance laws or regulations.

- In the United States, the Health Insurance Portability and Accountability Act (HIPAA) requires a contingency plan that includes data backups, data recovery, and data access during emergencies.

- The privacy laws of Switzerland demand that private data, including emails, be physically stored in Switzerland.

- In the United Kingdom, the Civil Contingencies Act of 2004 sets forth guidance for a business contingency plan that includes policies for data storage.

In a virtualized cloud computing environment, customers may never know exactly where their data is stored. In fact, data may be stored across multiple data centers in an effort to improve reliability, increase performance, and provide redundancies. This geographic dispersion may make it more difficult to ascertain legal jurisdiction if disputes arise.[97]

Legal

As with other changes in the landscape of computing, certain legal issues arise with cloud computing, including trademark infringement, security concerns and sharing of proprietary data resources.

One important but not often mentioned problem with cloud computing is the question of who is in "possession" of the data. If a cloud company is the possessor of the data, the possessor has certain legal rights. If the cloud company is the "custodian" of the data, then a different set of rights would apply. A further problem in the legalities of cloud computing is the legal ownership of the data. Many Terms of Service agreements are silent on the question of ownership.[99]

These legal issues are not confined to the time period in which the cloud-based application is actively being used. There must also be consideration for what happens when the provider-customer relationship ends. In most cases, this event will be addressed before an application is deployed to the cloud. In the case of provider insolvency or bankruptcy, however, the status of the data may become unclear.[97]

Vendor lock-in

Because cloud computing is still relatively new, standards are still being developed.[100] Many cloud platforms and services are proprietary, meaning that they are built on the specific standards, tools and protocols developed by a particular vendor for its particular cloud offering.[100] This can make migrating off a proprietary cloud platform prohibitively complicated and expensive.[100]

Three types of vendor lock-in can occur with cloud computing:[101]

- Platform lock-in: cloud services tend to be built on one of several possible virtualization platforms, for example VMware or Xen. Migrating from a cloud provider using one platform to a cloud provider using a different platform could be very complicated.

- Data lock-in: since the cloud is still new, standards of ownership, i.e. who actually owns the data once it lives on a cloud platform, are not yet developed, which could make it complicated if cloud computing users ever decide to move data off of a cloud vendor's platform.

- Tools lock-in: if tools built to manage a cloud environment are not compatible with different kinds of both virtual and physical infrastructure, those tools will only be able to manage data or apps that live in the vendor's particular cloud environment.

Heterogeneous cloud computing is described as a type of cloud environment that prevents vendor lock-in and aligns with enterprise data centers that are operating hybrid cloud models.[102] The absence of vendor lock-in lets cloud administrators choose hypervisors for specific tasks, or deploy virtualized infrastructures to other enterprises without needing to consider the flavor of hypervisor in the other enterprise.[103]

A heterogeneous cloud is considered to be one that includes on-premises private clouds, public clouds and software-as-a-service clouds. Heterogeneous clouds can work with environments that are not virtualized, such as traditional data centers.[104] Heterogeneous clouds also allow for the use of piece parts, such as hypervisors, servers, and storage, from multiple vendors.[105]

Cloud piece parts, such as cloud storage systems, offer APIs, but these are often incompatible with each other.[106] The result is complicated migration between backends and difficulty integrating data spread across various locations.[106] This has been described as a problem of vendor lock-in.[106] The solution to this is for clouds to adopt common standards.[106]
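Until common standards are adopted, one workaround is for applications to code against a single storage interface and wrap each vendor behind an adapter. This is a different technique from an industry standard, shown here only as a sketch: `ObjectStore` plays the role of the common interface, and `VendorAStore` and `VendorBStore` are hypothetical in-memory stand-ins for two backends with different native data models; no real provider SDK is used. Migration then needs only the shared `put`, `get` and `keys` methods.

```python
from abc import ABC, abstractmethod

class ObjectStore(ABC):
    """Common interface the application codes against."""
    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...
    @abstractmethod
    def get(self, key: str) -> bytes: ...
    @abstractmethod
    def keys(self) -> list[str]: ...

class VendorAStore(ObjectStore):
    """Adapter for a hypothetical backend that stores flat key/value pairs."""
    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}
    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data
    def get(self, key: str) -> bytes:
        return self._objects[key]
    def keys(self) -> list[str]:
        return sorted(self._objects)

class VendorBStore(ObjectStore):
    """Adapter for a hypothetical backend that groups blobs into named buckets."""
    def __init__(self, bucket: str) -> None:
        self._bucket = bucket
        self._buckets: dict[str, dict[str, bytes]] = {bucket: {}}
    def put(self, key: str, data: bytes) -> None:
        self._buckets[self._bucket][key] = data
    def get(self, key: str) -> bytes:
        return self._buckets[self._bucket][key]
    def keys(self) -> list[str]:
        return sorted(self._buckets[self._bucket])

def migrate(source: ObjectStore, target: ObjectStore) -> int:
    """Copy every object between backends using only the common interface."""
    count = 0
    for key in source.keys():
        target.put(key, source.get(key))
        count += 1
    return count
```

The adapters absorb the vendor-specific differences, so moving data between backends no longer depends on either vendor's native API; the cost is writing and maintaining one adapter per backend.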

Heterogeneous cloud computing differs from homogeneous clouds, which have been described as those using consistent building blocks supplied by a single vendor.[107] Intel General Manager of high-density computing, Jason Waxman, is quoted as saying that a homogeneous system of 15,000 servers would cost $6 million more in capital expenditure and use 1 megawatt of power.

Open source

Open standards

Most cloud providers expose APIs that are typically well documented (often under a Creative Commons license[111]) but also unique to their implementation and thus not interoperable. Some vendors have adopted others' APIs, and a number of open standards are under development with a view to delivering interoperability and portability.[112] As of November 2012, the open standard with the broadest industry support was probably OpenStack, founded in 2010 by NASA and Rackspace and now governed by the OpenStack Foundation.[113] OpenStack supporters include AMD, Intel, Canonical, SUSE Linux, Red Hat, Cisco, Dell, HP, IBM, Yahoo and now VMware.

Security

As cloud computing achieves increased popularity, concerns are being voiced about the security issues introduced through adoption of this new model. The effectiveness and efficiency of traditional protection mechanisms are being reconsidered, as the characteristics of this innovative deployment model can differ widely from those of traditional architectures.

An alternative perspective on the topic of cloud security is that this is but another, although quite broad, case of "applied security", and that security principles similar to those that apply in shared multi-user mainframe security models also apply to cloud security.

The relative security of cloud computing services is a contentious issue that may be delaying its adoption. Physical control of private cloud equipment is more secure than having the equipment off site and under someone else's control. Physical control and the ability to visually inspect data links and access ports are required to ensure that data links are not compromised. Issues barring the adoption of cloud computing are due in large part to the private and public sectors' unease surrounding the external management of security-based services. It is the very nature of cloud computing-based services, private or public, to promote external management of provided services. This gives cloud computing service providers a strong incentive to prioritize building and maintaining strong management of secure services. Security issues have been categorised into sensitive data access, data segregation, privacy, bug exploitation, recovery, accountability, malicious insiders, management console security, account control, and multi-tenancy issues. Solutions to various cloud security issues vary, from cryptography, particularly public key infrastructure (PKI), to use of multiple cloud providers, standardisation of APIs, and improving virtual machine support and legal support.
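As one small example of the cryptographic approaches mentioned above, a customer can detect unauthorized alteration of data held by a provider by keeping a secret key and storing an HMAC tag for each object. This sketch uses only Python's standard `hmac`, `hashlib` and `secrets` modules; note that HMAC is a symmetric-key technique rather than PKI, and it provides integrity only: it detects tampering but does not keep data confidential, which would additionally require encryption. The `seal` and `verify` names and the sample record are hypothetical.

```python
import hashlib
import hmac
import secrets

def seal(key: bytes, data: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the data using the customer's secret key."""
    return hmac.new(key, data, hashlib.sha256).digest()

def verify(key: bytes, data: bytes, tag: bytes) -> bool:
    """Recompute the tag in constant time; a mismatch means the bytes were changed."""
    return hmac.compare_digest(seal(key, data), tag)

key = secrets.token_bytes(32)      # kept by the customer, never given to the provider
record = b"invoice 1042: 250.00"   # hypothetical data uploaded to the cloud
tag = seal(key, record)

print(verify(key, record, tag))                     # untouched copy
print(verify(key, b"invoice 1042: 2500.00", tag))   # altered copy
```

Because the provider never sees the key, it cannot produce a valid tag for modified data; detecting deletion, however, still requires the customer to keep track of which objects should exist.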

Cloud computing offers many benefits, but it is vulnerable to threats. As cloud computing use increases, more criminals are likely to find new ways to exploit system vulnerabilities. Many underlying challenges and risks in cloud computing increase the threat of data compromise. To mitigate the threat, cloud computing stakeholders should invest heavily in risk assessment to ensure that the system encrypts data, establishes a trusted foundation to secure the platform and infrastructure, and builds higher assurance into auditing to strengthen compliance. Security concerns must be addressed to maintain trust in cloud computing technology.[1]

Sustainability

Although cloud computing is often assumed to be a form of green computing, no published study substantiates this assumption.[121] The environmental effects of cloud computing depend largely on its servers: in areas where the climate favors natural cooling and renewable electricity is readily available, the environmental effects will be more moderate. (The same holds true for "traditional" data centers.) Thus countries with favorable conditions, such as Finland,[122] Sweden and Switzerland,[123] are trying to attract cloud computing data centers. Energy efficiency in cloud computing can result from energy-aware scheduling and server consolidation.[124] However, in the case of distributed clouds spanning data centers with different energy sources, including renewable ones, a small compromise on energy consumption reduction could result in a large reduction in carbon footprint.[125]
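The trade-off in the last sentence can be shown with a worked example. The two sites and their figures are hypothetical: site A needs 100 kWh for a job on a grid emitting 0.5 kg CO2 per kWh, while site B needs 10% more energy (110 kWh) but draws on a mostly renewable supply at 0.0625 kg CO2 per kWh. Emissions are simply energy multiplied by carbon intensity.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Site:
    name: str
    energy_kwh: float      # energy the job needs at this site
    kg_co2_per_kwh: float  # carbon intensity of the local electricity

    @property
    def emissions_kg(self) -> float:
        return self.energy_kwh * self.kg_co2_per_kwh

# Hypothetical sites: B is less energy-efficient but runs on a cleaner grid.
SITES = [Site("A", 100.0, 0.5), Site("B", 110.0, 0.0625)]

most_efficient = min(SITES, key=lambda s: s.energy_kwh)
greenest = min(SITES, key=lambda s: s.emissions_kg)
print(most_efficient.name, most_efficient.energy_kwh, most_efficient.emissions_kg)
print(greenest.name, greenest.energy_kwh, greenest.emissions_kg)
```

Minimizing energy selects site A (100 kWh, 50 kg CO2), whereas minimizing carbon selects site B (110 kWh, 6.875 kg CO2): in this example, accepting 10% more energy cuts emissions by about 86%.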

Abuse

As with privately purchased hardware, customers can purchase the services of cloud computing for nefarious purposes, including password cracking and launching attacks. In 2009, a banking trojan illegally used the popular Amazon service as a command-and-control channel that issued software updates and malicious instructions to PCs infected by the malware.

IT governance

The introduction of cloud computing requires an appropriate IT governance model to ensure a secured computing environment and to comply with all relevant organizational information technology policies. As such, organizations need a set of capabilities that are essential to effectively implementing and managing cloud services, including demand management, relationship management, data security management, application lifecycle management, and risk and compliance management. A danger lies in the explosion of companies joining the growth in cloud computing by becoming providers, while many of the infrastructural and logistical concerns regarding the operation of cloud computing businesses are still unknown. This over-saturation may have ramifications for the industry as a whole.

Consumer end storage

The increased use of cloud computing could lead to a reduction in demand for high-storage-capacity consumer end devices, as cheaper low-storage devices that stream all content via the cloud become more popular. In a Wired article, Jake Gardner explains that while unregulated usage is beneficial for IT and tech moguls like Amazon, the anonymous nature of cloud consumption costs makes it difficult for businesses to evaluate cloud usage and incorporate it into their business plans.[131] Cloud computing is growing in popularity so quickly among all sorts of companies that in May 2013 Amazon, through its subsidiary Amazon Web Services, started a certification program for cloud computing professionals.

Ambiguity of terminology

Outside of the information technology and software industry, the term "cloud" can be found to reference a wide range of services, some of which fall under the category of cloud computing, while others do not. The cloud is often used to refer to a product or service that is discovered, accessed and paid for over the Internet, but is not necessarily a computing resource. Examples of services that are sometimes referred to as "the cloud" include, but are not limited to, crowdsourcing, cloud printing, crowdfunding and cloud manufacturing.

Performance interference and noisy neighbors

Due to its multi-tenant nature and resource sharing, cloud computing must also deal with the "noisy neighbor" effect. In essence, this effect means that in a shared infrastructure, the activity of a virtual machine on a neighboring core of the same physical host may degrade the performance of the other VMs on that host, due to issues such as cache contamination. Because neighboring VMs may be activated or deactivated at arbitrary times, the result is increased variation in the actual performance of cloud resources. This effect seems to depend on the nature of the applications that run inside the VMs, but also on other factors such as scheduling parameters, whose careful selection may lead to an optimized assignment that minimizes the phenomenon.[134]
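The performance variation caused by noisy neighbors can be quantified by repeatedly timing the same operation and computing the coefficient of variation (standard deviation divided by mean) of the samples. The latency figures below are invented for illustration, and the 0.2 threshold in the hypothetical `looks_noisy` helper is an arbitrary assumption rather than an established cutoff.

```python
import statistics

def coefficient_of_variation(samples: list[float]) -> float:
    """Population standard deviation relative to the mean; 0 means perfectly steady."""
    mean = statistics.mean(samples)
    if mean == 0:
        raise ValueError("mean latency must be non-zero")
    return statistics.pstdev(samples) / mean

def looks_noisy(samples: list[float], threshold: float = 0.2) -> bool:
    # Hypothetical cutoff: flag hosts whose latency varies by more than 20% of the mean.
    return coefficient_of_variation(samples) > threshold

quiet_host = [10.0, 10.0, 10.0, 10.0]  # ms, invented measurements
busy_host = [10.0, 10.0, 30.0, 10.0]   # one sample slowed by a neighbor

print(coefficient_of_variation(quiet_host), looks_noisy(quiet_host))
print(coefficient_of_variation(busy_host), looks_noisy(busy_host))
```

A single slow sample raises the busy host's coefficient of variation to about 0.58, while the steady host stays at zero; in practice, many more samples would be needed before drawing conclusions about a host.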

Research

Many universities, vendors, institutes and government organizations are investing in research around the topic of cloud computing:

- In October 2007, the Academic Cloud Computing Initiative (ACCI) was announced as a multi-university project designed to enhance students' technical knowledge to address the challenges of cloud computing.

- In April 2009, UC Santa Barbara released the first open source platform-as-a-service, AppScale, which is capable of running Google App Engine applications at scale on a multitude of infrastructures.

- In April 2009, the St Andrews Cloud Computing Co-laboratory was launched, focusing on research in the important new area of cloud computing. Unique in the UK, StACC aims to become an international centre of excellence for research and teaching in cloud computing and provides advice and information to businesses interested in cloud-based services.

- In October 2010, the TClouds (Trustworthy Clouds) project was started, funded by the European Commission's 7th Framework Programme. The project's goal is to research and inspect the legal foundation and architectural design for a resilient and trustworthy cloud-of-clouds infrastructure, and to build such an infrastructure on that basis. The project also develops a prototype to demonstrate its results.[139]

- In December 2010, the TrustCloud research project [140][141] was started by HP Labs Singapore to address transparency and accountability of cloud computing via detective, data-centric approaches[142] encapsulated in a five-layer TrustCloud Framework. The team identified the need for monitoring data life cycles and transfers in the cloud,[140] leading to the tackling of key cloud computing security issues such as cloud data leakages, cloud accountability and cross-national data transfers in transnational clouds.

- In June 2011, the Telecommunications Industry Association developed a Cloud Computing White Paper to analyze the integration challenges and opportunities between cloud services and traditional U.S. telecommunications standards.[144]

- In July 2011, the High Performance Computing Cloud (HPCCLoud) project was launched to explore ways of enhancing performance in cloud environments when running scientific applications. It developed the HPCCLoud Performance Analysis Toolkit, funded by the CIM-Returning Experts Programme, under the coordination of Prof. Dr. Shajulin Benedict.

- In December 2012, a study released by Microsoft and the International Data Corporation (IDC) showed that millions of cloud-skilled workers would be needed. Millions of cloud-related IT jobs were sitting open, and millions more would open in the following couple of years, due to a shortage of cloud-certified IT workers.

- In February 2013, the BonFIRE project launched a multi-site cloud experimentation and testing facility. The facility provides transparent access to cloud resources, with the control and observability necessary to engineer future cloud technologies, in a way that is not restricted, for example, by current business models.[145]

- In April 2013, a report by IT research and advisory firm Gartner, Inc. predicted that app developers would embrace cloud services and that, within three years, 40% of mobile app development projects would use cloud-backed services. Cloud mobile back-end services offer a new kind of PaaS, used to enable the development of mobile apps.

Early references in popular culture

In the 1966 Star Trek episode "Miri," Dr. McCoy, while stationed planetside, uses the computer of the orbiting Enterprise to process the data gathered by his portable equipment.